Databricks Magic Command

Notebooks are web pages that have an engine behind them to run code. Azure Databricks Notebooks are similar to IPython and Jupyter Notebooks. The engine is based on a REPL (read-eval-print loop) that reads our code, evaluates it with the interpreter, and prints any results. Notebooks are the main interface for building code within Azure Databricks.

To get started, on the main page of Azure Databricks click on New Notebook under Common Tasks. We are then prompted with a dialog box requesting a name and the type of language for the Notebook. These languages can be Python, Scala, SQL, or R. For this post we will use Python.

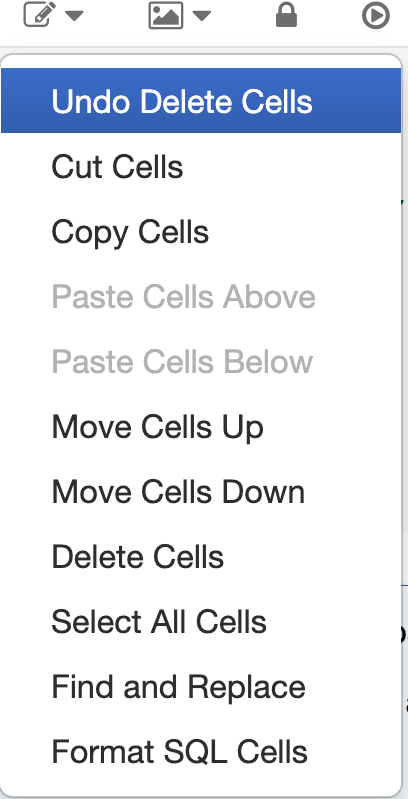

When you create a new notebook you will see the following. The title and type of notebook will be at the top along with a toolbar. Notebooks have cells that allow for text, images, and code to be entered. Each cell is executed separately in the REPL, and variables can be shared between executions.
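To make the idea of shared variables concrete, here is a minimal sketch in plain Python. It is only an illustration, not how Databricks implements its REPL: a dictionary named `shared_namespace` (a made-up name) stands in for the notebook's state, and each string in `cells` plays the role of one cell.

```python
# Illustration only: a dictionary stands in for the state the REPL keeps
# between cell executions. This is not Databricks' actual implementation.
shared_namespace = {}

cells = [
    "greeting = 'hello'",                 # cell 1 defines a variable
    "message = greeting + ', notebook'",  # cell 2 reads the variable from cell 1
]

# Each cell is executed separately, but against the same namespace.
for source in cells:
    exec(source, shared_namespace)

print(shared_namespace["message"])
```

Because both cells run against the same namespace, the second cell can use `greeting` even though it was defined in an earlier execution.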

For now let’s review the toolbar. The first icon on the toolbar is for attaching the notebook to a cluster. By default it will be detached as shown, unless you have a cluster up and running.

The second icon, File, allows for copying, renaming, deleting, exporting, and clearing revision history. We will go over revision history in a later post. For exporting we can choose DBC Archive, Source File, IPython Notebook, or HTML.

A DBC Archive file is a Databricks archive: the notebook packaged as a JAR file with extra metadata. Like a zip file, this makes distributing notebooks between teams easier. A Source File export will be a Python, Scala, SQL, or R file, depending on the language of the notebook.
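As a rough sketch of what a Source File export of a Python notebook can look like: the header and separator comments below follow the format we have seen in Databricks source exports, while the cell contents (the `flights` list and its values) are made up for illustration.

```python
# Databricks notebook source
# MAGIC %md
# MAGIC # Example notebook
# MAGIC A Markdown cell is stored as MAGIC comments in the exported file.

# COMMAND ----------

# A regular Python cell; the values are made up for illustration.
flights = [("AA", 12), ("DL", 7), ("UA", 0)]

# COMMAND ----------

# Another cell, reusing the variable defined in the previous cell.
delayed = [carrier for carrier, delay_minutes in flights if delay_minutes > 0]
print(delayed)
```

Since the cell boundaries and the Markdown cell are plain comments, the file still runs as an ordinary Python script outside of a notebook.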

The third icon is for viewing code or results only. It also allows for hiding and showing line numbers and command numbers, such as the Cmd 1 label and the line number 1 that appear in a new notebook. There is also an option to create a dashboard to display visualizations from a notebook.

Each cell can operate separately from the others. One nice benefit of using notebooks and cells is magic commands, which specify the language for the remainder of the cell. A magic command has to be the first characters in the cell to change the language; otherwise the cell uses the notebook's default language, shown in parentheses next to the title.

The language magic commands are %python, %r, %scala, and %sql. Other magic commands give access to the shell (%sh) and the Databricks file system (%fs), or render Markdown (%md). Using Markdown allows us to integrate documentation alongside the code.
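The rule that a magic command only counts when it is the first thing in a cell can be sketched in a few lines of Python. The helper `resolve_cell_kind` and its lookup table are hypothetical names for illustration and are not part of any Databricks API. Falling back to the default language for an unrecognized command is a simplification made for this sketch.

```python
# Hypothetical helper for illustration; not part of any Databricks API.
MAGIC_COMMANDS = {
    "%python": "python",
    "%r": "r",
    "%scala": "scala",
    "%sql": "sql",
    "%sh": "shell",
    "%fs": "filesystem",
    "%md": "markdown",
}


def resolve_cell_kind(cell_text, default_language):
    """Return how a cell would be interpreted, based on its leading magic command."""
    # The magic command must be the very first characters of the cell.
    if not cell_text.startswith("%"):
        return default_language
    # The first whitespace-delimited token is the candidate magic command.
    magic = cell_text.split(None, 1)[0]
    # Simplification: unknown commands fall back to the notebook default.
    return MAGIC_COMMANDS.get(magic, default_language)


print(resolve_cell_kind("%sql\nSELECT 1", "python"))
print(resolve_cell_kind("x = 1\n%sql", "python"))
```

The first call starts with `%sql`, so the cell is treated as SQL. In the second call the command is not at the start of the cell, so the notebook's default language applies.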

Notebooks allow for quick and easy development of code. With the ability to add documentation, images, and links, a notebook becomes a living document. In Azure Databricks, Notebooks are available to others who have been assigned the proper permissions, so they can share and collaborate in real time. There is also integration with GitHub for source control.

In the next post we will review setting up different notebooks to create a project. This project will use the well-known Airline On-Time Performance data from the Bureau of Transportation Statistics.